We are nearing the tipping point for AI-first customer service.

Generative AI announcements are at a fever pitch, with a new advancement or partnership coming every day. In this post, I’ll explain how the last few months of GenAI development will reshape customer conversation and engagement.

The lay of the land

- Release of GPT-4o: The Omni model from OpenAI can handle text, audio, and video in one go, making conversations with AI agents easier and more natural than ever before.

- Apple and OpenAI: This partnership brings ChatGPT to Apple experiences, massively expanding the availability of Omni-like advancements in one fell swoop.

- Call Arc: A crop of new generative AI-powered apps is bringing AI into consumers’ daily lives. Companies like Perplexity and Arc are poised to reinvent current consumer norms. For example, the new Call Arc feature brings traditional interactions, like picking up the phone, within reach of AI automation.

The explosion in this space shows us that UX for CX – the user experience for customer experience – is about to see a generational change.

Technology interfaces continue to gravitate towards more natural interactions, from voice-oriented personal assistants like Siri and Alexa, to hands-free automation for cars, to chatting with LLM-powered AIs. Consumers are becoming more and more accustomed to taking action by talking, not clicking buttons. As this trend becomes the norm, businesses will see an accelerated increase in conversations with their customers, and current deflection models will get upended by more natural, easy-to-use methods of interaction. Ultimately, conversations with both personal AI assistants and company AI agents will become as easy as talking to a friend.

We see it happening in this latest round of generative AI announcements: the underlying technology and its distribution have finally reached the point where businesses can shift to a new, completely AI-first mindset for customer service.

Why Parloa’s approach to GPT-4o is different

The recent release of OpenAI’s GPT-4o brings us to the brink of this new AI-first customer service world. At Parloa, we’re focused on bringing these advancements to our customers and partners, which is why we’re already using GPT-4o.

GPT-4o is unlike previous models in that it is a true omnimodel, or multimodal model, capable of handling and understanding information from text, audio, and video. This will allow customers who engage with this model to have more natural and intuitive interactions, especially in cases that previously required a lot of on-the-fly conversion from speech to text and back again.

How Parloa is using GPT-4o

As soon as GPT-4o was released, we started testing, evaluating, and integrating it into our AI platform so customers didn’t have to make any changes on their own, ensuring enterprises could safely use this industry-changing model for customer service use cases. Our strategic partnership with Microsoft allowed us to be one of the first companies in the world to provide GPT-4o on Azure to our customers, which adds layers of enterprise-grade security, data privacy, and compliance for AI initiatives.

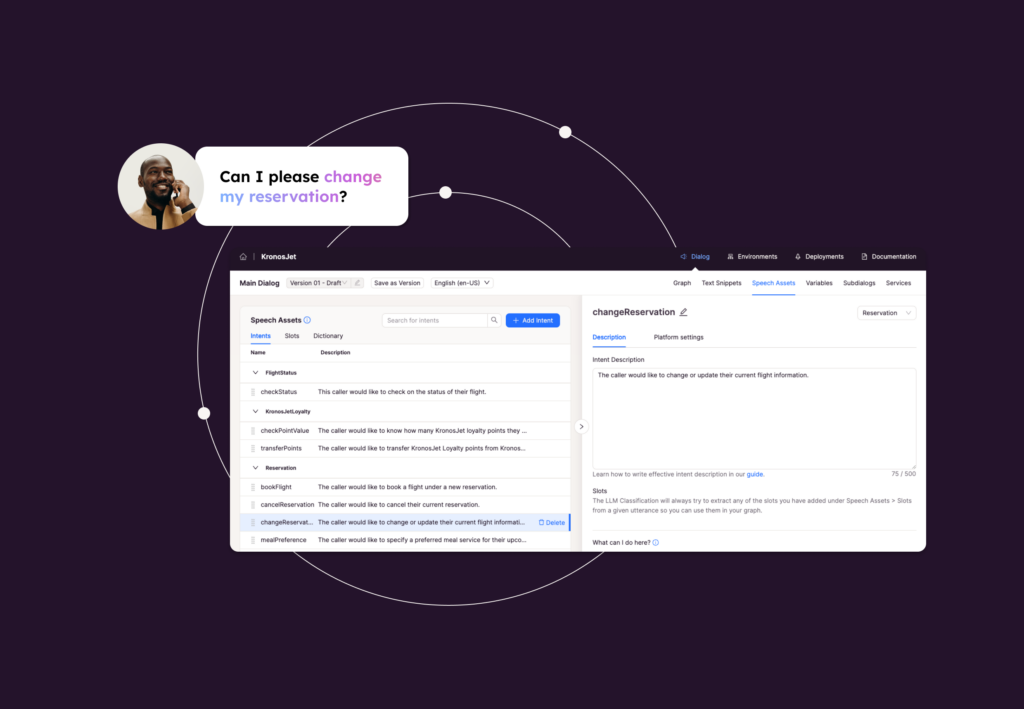

Identify caller intent and automate responses to FAQs: Parloa customers are using the text-to-text version of GPT-4o with our Intent Skill, which identifies caller intent and connects callers to the right resource, and our Knowledge Skill, which automates answers to frequently asked questions.

This guarantees Parloa customers can continue to take customer service to new heights with:

Superior customer experiences: Every time a new model is released, we run a comprehensive suite of tests to evaluate its quality and speed, with extensive simulations to benchmark latency and accuracy against the models available across the AI landscape. For example, with the release of GPT-4o, we benchmarked the latest model against GPT-4 Turbo and found that the latest version of GPT-4o reduces response-generation latency by 29.2% on average. We’re thrilled to bring these improvements to our customers, ensuring that they always have access to best-in-class technology, which, in this case, lowers latency to deliver ever more natural conversation.

Enterprise-grade security and compliance: By providing GPT-4o on Azure, Parloa allows customers to take advantage of the enhanced security while giving them peace of mind that every customer interaction is isolated, and company data and conversations aren’t used to train public LLMs. In other words, your customer data isn’t training OpenAI’s latest model.

- How do we ensure this? Your customer data lives in our private Azure instance and is never blended with another company’s data. Fully siloed, fully secure, so you can be fully confident that your customer data and conversations are never exposed. Then, we build on the enterprise-grade security and privacy foundation of Azure OpenAI with extensive testing of every LLM released to ensure our customers can access the most secure, powerful, and accurate models available. (Reach out to learn more!)

Better conversations with faster response times: Customers will experience conversations with AI Agents that mimic normal human interactions, especially with measurably reduced latency in response times. This lets companies rely less on intermediate responses, which are used to gracefully cover any delay while the model generates a response but can become repetitive in conversations with multiple questions and moving parts.

The best tech, no matter what: We are continuing to benchmark and evaluate other versions of GPT-4o, including the end-to-end audio version of the model that will be released later this year. We do this to ensure that customers are never locked into one model and that all the context of a conversation is fed to the best model available, which must be safe and reliable for customer-service-specific use cases while supporting the relevant languages of service.
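To make the benchmarking and model-selection ideas above a little more concrete, here is a minimal Python sketch. It is an illustration only, not Parloa’s actual test suite: the `BenchmarkResult` type, the function names, and every number in it are hypothetical placeholders. It shows how an average latency improvement can be computed from two sets of measurements, and how one might pick the fastest model that still meets accuracy and language requirements.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class BenchmarkResult:
    """Hypothetical summary of one model's simulated test run."""
    model: str
    latencies_ms: list[float]   # response-generation time per simulated turn
    accuracy: float             # share of simulated turns handled correctly
    languages: frozenset[str]   # languages the model was validated for


def latency_improvement_pct(baseline: BenchmarkResult,
                            candidate: BenchmarkResult) -> float:
    """Percent reduction in mean latency of candidate relative to baseline."""
    base = mean(baseline.latencies_ms)
    cand = mean(candidate.latencies_ms)
    return (base - cand) * 100.0 / base


def pick_model(results: list[BenchmarkResult],
               required_languages: frozenset[str],
               min_accuracy: float) -> Optional[BenchmarkResult]:
    """Return the lowest-latency model that meets the accuracy and language
    requirements, or None if no model qualifies."""
    eligible = [
        r for r in results
        if required_languages <= r.languages and r.accuracy >= min_accuracy
    ]
    if not eligible:
        return None
    return min(eligible, key=lambda r: mean(r.latencies_ms))


if __name__ == "__main__":
    # Placeholder measurements, invented purely for this example.
    older = BenchmarkResult("older-model", [1000.0, 1200.0, 800.0], 0.90,
                            frozenset({"en", "de"}))
    newer = BenchmarkResult("newer-model", [700.0, 800.0, 600.0], 0.92,
                            frozenset({"en", "de"}))

    print(f"Latency improvement: {latency_improvement_pct(older, newer):.1f}%")
    best = pick_model([older, newer], frozenset({"en", "de"}), min_accuracy=0.85)
    print("Selected:", best.model if best else "no eligible model")
```

Because selection happens over whatever results are passed in, adding a newly released model is just one more entry in the list, which is the practical meaning of not being locked into a single model.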

How brand interactions will change

We expect omnimodels to continue to have major implications for how companies converse with their customers. They will elevate both the quality and approachability of engaging with brands.

Background noise: A parent calls a doctor’s office to change an appointment with a child crying in the background. AI can handle that, sifting out a toddler’s voice to only focus on the information coming from the parent.

Customer context: A person is speaking with an AI Agent as an ambulance goes by on the street. Instead of interpreting the siren as a part of the call, the AI Agent will be able to stop and say: “Sorry, I was not able to understand you just then. Can you repeat that for me?”

Accessibility: A deaf person needs to make an insurance claim. Instead of relying only on chat, they are able to video call their insurance provider and communicate in sign language.

Let's go!

As the capabilities of foundation models grow and partnerships are forged between services, customer service will be a first mover in adopting this technology and will contribute significantly to the evolution of the AI-first engagement model on every level. Adopting it will become more and more urgent as customer expectations rise. Parloa will be there for you, every step of the way.

Contact us to see how you can accelerate the power of AI in your contact center.

Maik Hummel is the Lead AI Evangelist at Parloa and a passionate advocate for AI agents. He has worked in the Conversational AI space for the past six years, focusing on developing AI solutions that enhance customer service and drive Parloa’s GenAI-first strategy. Maik combines his technical expertise with an entrepreneurial spirit, supporting colleagues, customers, and partners in embracing the benefits of Generative AI.